Crowd-Guided Ensembles: How Can We Choreograph Crowd Workers for Video Segmentation?

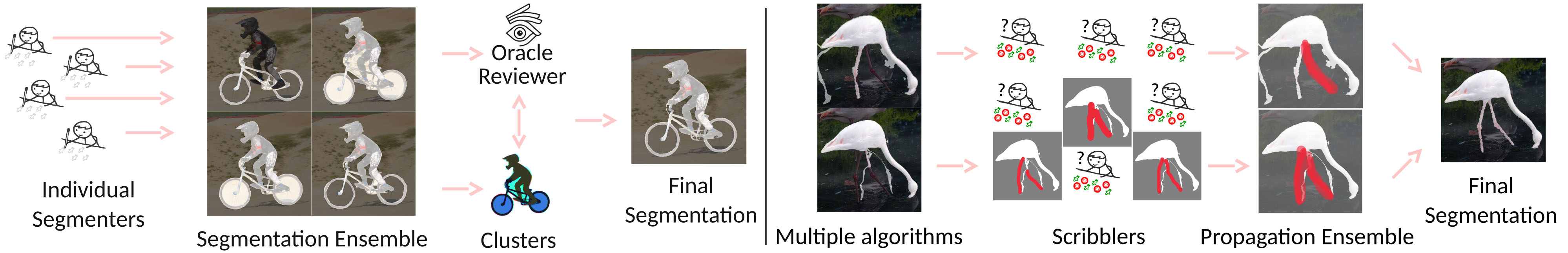

An illustration of our two proposed crowd-guided ensemble methods. Left: our segmentation ensemble combines the results of multiple crowd workers under the guidance of an oracle reviewer. Right: our propagation ensemble uses the scribbles accumulated from crowd workers to determine where each of several distinct algorithms fails, then merges their outputs into a result that incorporates the best of each algorithm.
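The caption does not spell out how the accumulated scribbles turn into a merged result. As a rough illustration only, assume each scribble is a set of pixels a worker marked as wrong in one specific algorithm's output. A simple per-pixel rule could then trust whichever algorithm's nearest failure mark lies farthest away. The names `merge_by_scribbles` and `nearest_failure_distance`, and the distance rule itself, are hypothetical and are not the paper's method.

```python
import math
from typing import Dict, List, Tuple

Mask = List[List[bool]]
# Hypothetical scribble format: (row, col) pixels a worker flagged as wrong.
Scribble = List[Tuple[int, int]]


def nearest_failure_distance(r: int, c: int, scribble: Scribble) -> float:
    """Euclidean distance from pixel (r, c) to the closest failure mark."""
    if not scribble:
        return math.inf  # never flagged: trust this algorithm everywhere
    return min(math.hypot(r - sr, c - sc) for sr, sc in scribble)


def merge_by_scribbles(results: Dict[str, Mask],
                       failures: Dict[str, Scribble]) -> Mask:
    """Per pixel, copy the label from the algorithm whose nearest failure
    scribble is farthest away (an assumed stand-in merging rule)."""
    names = list(results)
    first = results[names[0]]
    height, width = len(first), len(first[0])
    merged = []
    for r in range(height):
        row = []
        for c in range(width):
            # max() returns the first maximal name, so dict order breaks ties
            best = max(names, key=lambda n: nearest_failure_distance(
                r, c, failures.get(n, [])))
            row.append(results[best][r][c])
        merged.append(row)
    return merged
```

For example, if algorithm `"A"` is flagged only on the right half of a frame and `"B"` only on the left half, the merged mask takes its left half from `"A"` and its right half from `"B"`.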

Abstract

In this work, we propose two ensemble methods that leverage a crowd workforce to improve video annotation, with a focus on video object segmentation. Their shared principle is that, while individual candidate results may be insufficient on their own, they often complement each other and can be combined into something better than any single one of them, which is the very spirit of collaborative work. First, we extend a standard polygon-drawing interface to let workers annotate negative space, and we combine the work of multiple workers instead of relying on a single best one, as is commonly done in crowdsourced image segmentation. Second, we present a method that combines multiple automatic propagation algorithms with the help of the crowd. Such a combination requires an understanding of where the algorithms fail, which we gather through a novel coarse-scribble video annotation task. We evaluate both ensemble methods, discuss our design choices for them, and make our web-based crowdsourcing tools and results publicly available.
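The abstract does not give the geometry behind annotating negative space. A minimal sketch, assuming a worker submits "positive" polygons around the object and "negative" polygons around holes or background inside them, rasterizes one worker's mask as positive area minus negative area using an even-odd point-in-polygon test. To combine several workers it uses a plain per-pixel majority vote, which is a hypothetical stand-in: the paper's segmentation ensemble is instead guided by an oracle reviewer. All function names here are illustrative.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Polygon = List[Point]
Mask = List[List[bool]]


def point_in_polygon(x: float, y: float, poly: Polygon) -> bool:
    """Even-odd ray casting: count edges crossed by a ray going right."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line at y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def worker_mask(positive: List[Polygon], negative: List[Polygon],
                width: int, height: int) -> Mask:
    """Foreground = inside some positive polygon and inside no negative one."""
    mask = []
    for row in range(height):
        line = []
        for col in range(width):
            cx, cy = col + 0.5, row + 0.5  # sample at the pixel centre
            pos = any(point_in_polygon(cx, cy, p) for p in positive)
            neg = any(point_in_polygon(cx, cy, p) for p in negative)
            line.append(pos and not neg)
        mask.append(line)
    return mask


def majority_vote(masks: List[Mask]) -> Mask:
    """Hypothetical combination: foreground where more than half agree."""
    height, width = len(masks[0]), len(masks[0][0])
    return [[sum(m[r][c] for m in masks) * 2 > len(masks)
             for c in range(width)] for r in range(height)]
```

A worker who outlines a 4x4 square and marks its 2x2 centre as negative space produces a ring-shaped mask; a second worker who skips the negative polygon would produce a filled square, and the vote then depends on how many workers drew the hole.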

Paper


BibTeX

author={Alexandre Kaspar and Genevi\`eve Patterson and Changil Kim and Ya\u{g}{\i}z Aksoy and Wojciech Matusik and Mohamed Elgharib},

title={Crowd-Guided Ensembles: How Can We Choreograph Crowd Workers for Video Segmentation?},

booktitle={Proc. ACM CHI},

year={2018},

}

Data

Segmentation results, interfaces, and videos (80 MB)

Related Publications