DynaPix - Normal Map Pixelization for Dynamic Lighting

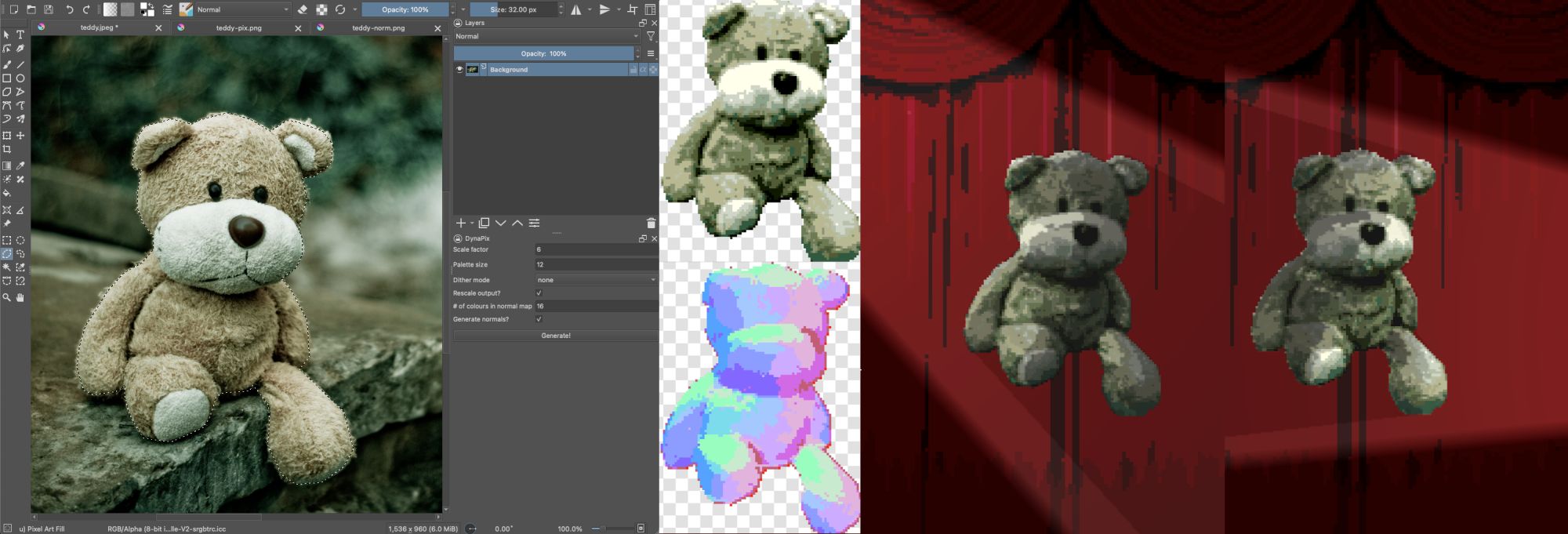

DynaPix is a Krita extension that combines an existing pixelization engine with a neural-network surface normal estimator to generate pixelated images and their corresponding normal maps. These pixelated representations can be integrated directly into modern game engines for dynamic relighting.
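DynaPix relies on an existing pixelization engine whose internals are not described here. To illustrate what pixelizing a normal map involves, the sketch below uses a simple stand-in that is not DynaPix's method: it averages each block of unit normals and renormalizes the result. The function names `normalize` and `pixelize_normals` and the nested-list image layout are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length; fall back to a flat, camera-facing normal."""
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        return (0.0, 0.0, 1.0)
    return (v[0] / length, v[1] / length, v[2] / length)


def pixelize_normals(normals: List[List[Vec3]], block: int) -> List[List[Vec3]]:
    """Downsample a normal map by averaging each block x block tile (hypothetical stand-in).

    Edge tiles smaller than `block` are averaged over the pixels they contain.
    """
    h, w = len(normals), len(normals[0])
    out: List[List[Vec3]] = []
    for by in range(0, h, block):
        row: List[Vec3] = []
        for bx in range(0, w, block):
            sx = sy = sz = 0.0
            for y in range(by, min(by + block, h)):
                for x in range(bx, min(bx + block, w)):
                    nx, ny, nz = normals[y][x]
                    sx += nx
                    sy += ny
                    sz += nz
            # Averaging unit vectors shortens them, so renormalize for lighting.
            row.append(normalize((sx, sy, sz)))
        out.append(row)
    return out
```

Renormalizing matters because an average of differently oriented unit vectors is shorter than unit length, and lighting computations generally expect unit normals.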

Abstract

This work introduces DynaPix, a Krita extension that automatically generates a pixelated image and its surface normals from an input image. DynaPix helps pixel artists and game developers create 8-bit style games more efficiently and bring them to life with dynamic lighting, using normal maps that modern game engines such as Unity support. The extension gives artists a degree of flexibility and allows further refinement of the generated artwork. Built on off-the-shelf solutions, DynaPix integrates seamlessly into the artistic workflow.
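To show how a generated normal map can drive dynamic lighting, here is a minimal per-pixel sketch. It assumes the common convention of storing each normal component in an 8-bit RGB channel via n = 2c - 1 (with c scaled to [0, 1]) and uses a plain Lambertian diffuse term plus a constant ambient term. A real engine such as Unity uses its own shaders, tangent spaces, and lighting models; the function names and the `ambient` parameter here are hypothetical.

```python
import math
from typing import Tuple

RGB = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]


def decode_normal(rgb: RGB) -> Vec3:
    """Map each 8-bit channel from [0, 255] to [-1, 1], then renormalize."""
    v = tuple(c / 255.0 * 2.0 - 1.0 for c in rgb)
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def shade_pixel(albedo: RGB, normal_rgb: RGB, light_dir: Vec3, ambient: float = 0.1) -> RGB:
    """Relight one pixel with a Lambertian term max(0, N.L) plus constant ambient."""
    n = decode_normal(normal_rgb)
    l_len = math.sqrt(sum(x * x for x in light_dir))
    l = tuple(x / l_len for x in light_dir)
    diffuse = max(0.0, sum(a * b for a, b in zip(n, l)))
    intensity = min(1.0, ambient + diffuse)  # clamp so colors stay in range
    return tuple(round(c * intensity) for c in albedo)
```

Moving `light_dir` between frames and reshading every pixel is what makes the lighting dynamic: the pixel colors stay fixed while the normals decide how brightly each pixel responds to the light.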

This work was developed by Gerardo and Denys as an undergraduate class project for CMPT 461 - Computational Photography at SFU.

Krita Extension and Implementation

GitHub Repository for CMPT 461 - Computational Photography

Paper

Video

BibTeX

author={Gerardo Gandeaga and Denys Iliash and Chris Careaga and Ya\u{g}{\i}z Aksoy},
title={Dyna{P}ix: Normal Map Pixelization for Dynamic Lighting},
booktitle={SIGGRAPH Posters},
year={2022},
}
