Datamoshing with Optical Flow

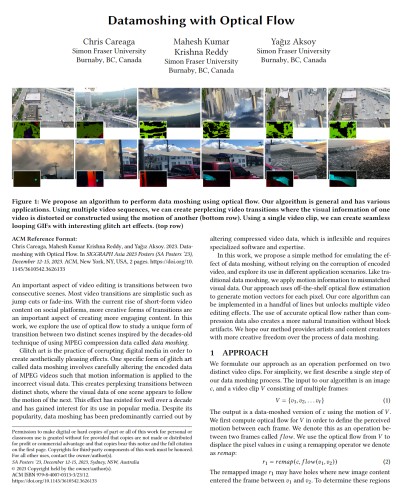

We propose an algorithm to perform data moshing using optical flow. Our algorithm is general and has various applications. Using a single video clip, we can create seamless looping GIFs with interesting glitch art effects (top row). Using multiple video sequences, we can create perplexing video transitions where the visual information of one video is distorted or constructed using the motion of another (bottom row).

Abstract

We propose a simple method for emulating the effect of data moshing, without relying on the corruption of encoded video, and explore its use in different application scenarios. Like traditional data moshing, we apply motion information to mismatched visual data. Our approach uses off-the-shelf optical flow estimation to generate motion vectors for each pixel. Our core algorithm can be implemented in a handful of lines but unlocks multiple video editing effects. The use of accurate optical flow rather than compression data also creates a more natural transition without block artifacts. We hope our method provides artists and content creators with more creative freedom over the process of data moshing.
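To make the idea of applying motion to mismatched visual data concrete, here is a minimal sketch in Python with NumPy. It is not the authors' implementation: the function names `warp_with_flow` and `mosh` are hypothetical, the flow layout `(H, W, 2)` holding `(dx, dy)` in pixels is an assumption, and the nearest-neighbour backward warp is a simple approximation chosen for brevity. The flow fields themselves would come from any off-the-shelf estimator, which is outside this sketch.

```python
import numpy as np


def warp_with_flow(image, flow):
    """Backward-warp `image` by a dense flow field (nearest-neighbour sampling).

    image: (H, W) or (H, W, C) array.
    flow:  (H, W, 2) array, assumed to store (dx, dy) displacements in pixels.
    Each output pixel (x, y) is read from (x - dx, y - dy) in `image`,
    using the flow sampled at the output location (an approximation).
    """
    h, w = flow.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    # Round to the nearest source pixel and clamp to the image borders.
    src_x = np.clip(np.rint(xs - flow[..., 0]), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys - flow[..., 1]), 0, h - 1).astype(int)
    return image[src_y, src_x]


def mosh(start_frame, flows):
    """Push one frozen frame along a sequence of flow fields.

    `start_frame` supplies the visual data; `flows` supplies the motion,
    typically estimated from a different clip or a later part of the same clip.
    Returns one warped frame per flow field.
    """
    frame = start_frame
    out = []
    for flow in flows:
        # Warp the previous result, so distortions accumulate over time.
        frame = warp_with_flow(frame, flow)
        out.append(frame)
    return out
```

Because each step warps the previous output rather than the original frame, the distortion builds up over time, which loosely mirrors how motion data keeps acting on stale pixels in traditional data moshing. Working per pixel instead of per compression block is what avoids block artifacts.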

This work was developed by Chris and Mahesh as a class project for CMPT 769 - Computational Photography at SFU.

Paper

Video (coming soon)

BibTeX

author={Chris Careaga and Mahesh Kumar Krishna Reddy and Ya\u{g}{\i}z Aksoy},
title={Datamoshing with Optical Flow},
booktitle={SIGGRAPH Asia Posters},
year={2023},
}
