Designing Effective Inter-Pixel Information Flow for Natural Image Matting

Extended version on arXiv

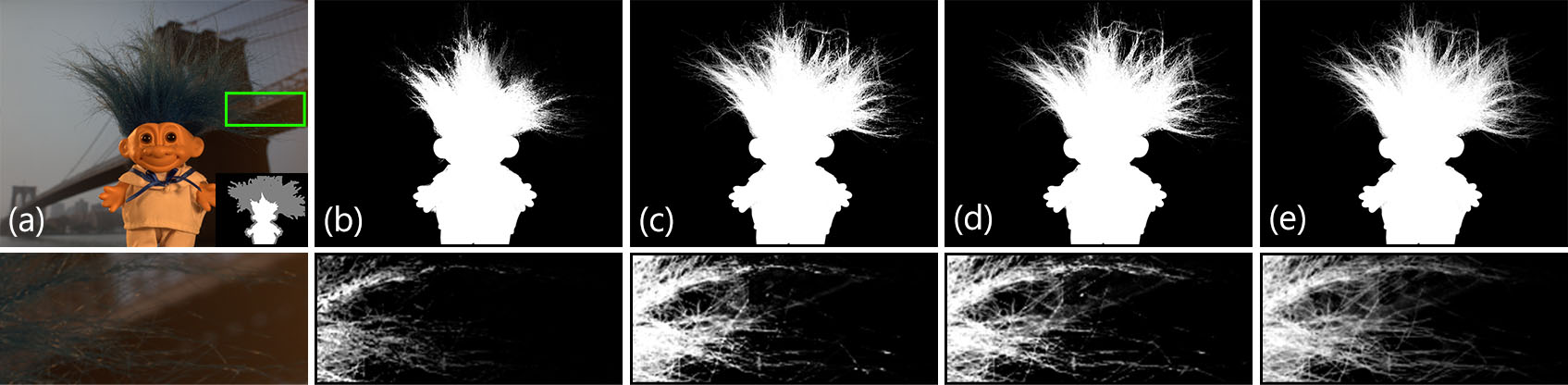

For an input image and a trimap (a), we define several forms of information flow inside the image. We begin with color-mixture flow (b), then add direct channels of information flow from the known regions to the unknown region (c), and enable effective sharing of information inside the unknown region (d) to increase the matte quality in challenging regions. We finally add local information flow to obtain our spatially smooth result (e).

Abstract

We present a novel, purely affinity-based natural image matting algorithm. Our method relies on carefully defined pixel-to-pixel connections that enable effective use of the information available in the image. We control the information flow from the known-opacity regions into the unknown region, as well as within the unknown region itself, by utilizing multiple definitions of pixel affinities. Among other forms of information flow, we introduce color-mixture flow, which builds upon local linear embedding and effectively encapsulates the relation between different pixel opacities. The resulting linear system can be solved in closed form and is robust against several fundamental challenges of natural matting, such as holes and remote intricate structures. Our evaluation using the alpha matting benchmark suggests a significant performance improvement over current methods. While our method is primarily designed as a standalone matting tool, we show that it can also be used for regularizing mattes obtained by sampling-based methods. We extend our formulation to layer color estimation and show that using multiple channels of flow increases the layer color quality. We also demonstrate the performance of our method in green-screen keying and further analyze the characteristics of the affinities it uses.
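To make the color-mixture idea concrete, here is a minimal sketch in Python (NumPy/SciPy) of a matte computed from a color-mixture term alone. It is not the authors' implementation or the toolbox's code: it assumes each pixel is described by a feature vector of RGB plus normalized (x, y), reconstructs that vector from its k nearest neighbors with locally linear embedding (LLE) weights that sum to one, builds the Laplacian L = (I - W)^T (I - W), and solves (L + λT)α = λTα_K, where T marks the trimap's known pixels. The names `pixel_features`, `lle_weights` and `color_mixture_matte`, and the values k = 7, reg = 1e-3 and λ = 100, are hypothetical choices for illustration. The other flows described above (known-to-unknown, unknown-to-unknown and local) are omitted.

```python
import numpy as np
import scipy.sparse as sp
import scipy.sparse.linalg as spla
from scipy.spatial import cKDTree


def pixel_features(image, spatial_weight=1.0):
    """Hypothetical feature choice: RGB plus normalized (x, y), one row per pixel."""
    h, w, _ = image.shape
    ys, xs = np.mgrid[0:h, 0:w]
    xy = np.stack([xs / w, ys / h], axis=-1) * spatial_weight
    return np.concatenate([image, xy], axis=-1).reshape(h * w, -1)


def lle_weights(features, k=7, reg=1e-3):
    """Sparse W whose row i reconstructs features[i] as an affine combination
    (weights sum to one) of its k nearest neighbors, as in LLE."""
    n = features.shape[0]
    _, idx = cKDTree(features).query(features, k=k + 1)
    neighbors = np.empty((n, k), dtype=int)
    weights = np.empty((n, k))
    for i in range(n):
        # Drop the pixel itself and keep the k closest remaining neighbors.
        nbrs = [j for j in idx[i] if j != i][:k]
        diff = features[nbrs] - features[i]          # (k, d) local offsets
        gram = diff @ diff.T                          # local Gram matrix
        # Tikhonov regularization keeps the Gram matrix invertible.
        gram += np.eye(k) * reg * (np.trace(gram) + 1e-12)
        w = np.linalg.solve(gram, np.ones(k))
        neighbors[i], weights[i] = nbrs, w / w.sum()  # enforce sum-to-one
    rows = np.repeat(np.arange(n), k)
    return sp.csr_matrix((weights.ravel(), (rows, neighbors.ravel())), shape=(n, n))


def color_mixture_matte(image, trimap, k=7, lam=100.0):
    """Solve (L + lam*T) alpha = lam*T*alpha_K with L = (I - W)^T (I - W).

    trimap: 0 = background, 1 = foreground, any other value = unknown.
    """
    h, w = trimap.shape
    tri = trimap.ravel()
    known = (tri == 0) | (tri == 1)
    W = lle_weights(pixel_features(image), k=k)
    M = sp.identity(h * w, format="csr") - W
    L = M.T @ M                                   # color-mixture Laplacian
    T = sp.diags(known.astype(float))             # known-pixel constraint mask
    A = (L + lam * T).tocsc()
    b = lam * np.where(known, tri, 0.0)
    alpha = spla.spsolve(A, b)
    return np.clip(alpha, 0.0, 1.0).reshape(h, w)
```

For example, `color_mixture_matte(image, trimap)` takes an H×W×3 float image in [0, 1] and a trimap that uses 0.5 for unknown pixels, and returns an H×W matte. Because every row of W sums to one, a constant matte lies in the null space of L. The λT term is therefore what ties the solution to the trimap, and it is also why a color-mixture term alone can leave parts of the unknown region poorly constrained.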

Manuscript


BibTeX

author={Aksoy, Ya\u{g}{\i}z and Ayd{\i}n, Tun\c{c} Ozan and Pollefeys, Marc},
booktitle={Proc. CVPR},
title={Designing Effective Inter-Pixel Information Flow for Natural Image Matting},
year={2017},
}

author={Aksoy, Ya\u{g}{\i}z and Ayd{\i}n, Tun\c{c} Ozan and Pollefeys, Marc},
journal = {\tt arXiv:1707.05055 [cs.CV]},
title={Designing Effective Inter-Pixel Information Flow for Natural Image Matting},
year={2017},
}

Benchmark results

Implementation

We cannot release the original source code. However, a reimplementation of the method is available as part of the affinity-based matting toolbox. Although this reimplementation does not reproduce the results of the original implementation exactly, we recommend it for comparisons and extensions.

Related Publications


PhD Thesis, ETH Zurich, 2019
