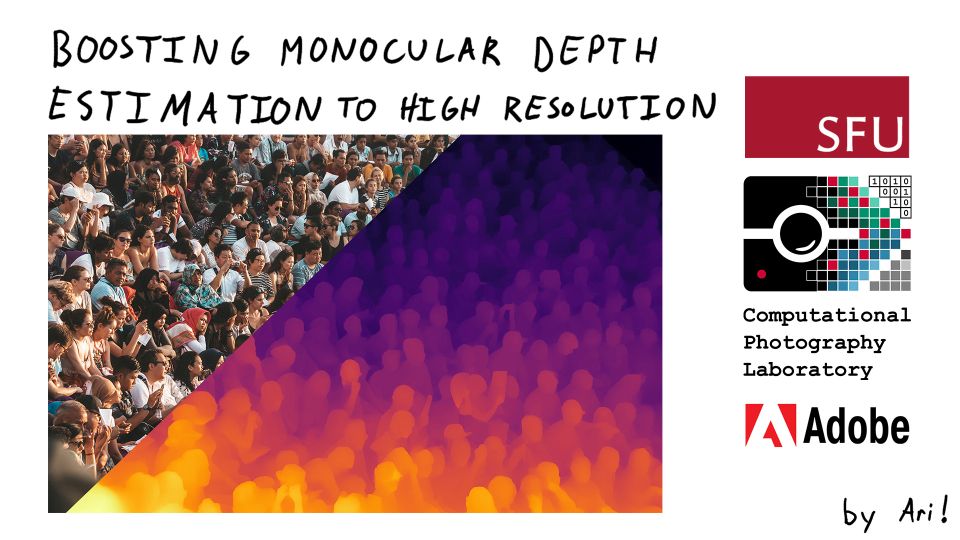

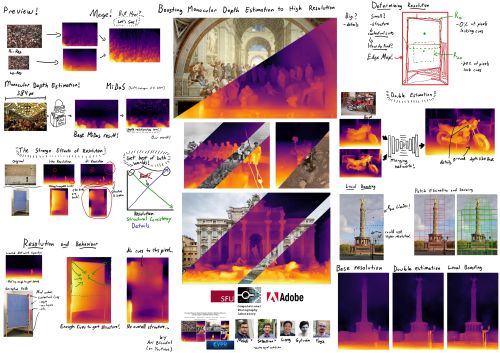

Scale-Invariant Monocular Depth Estimation via SSI Depth

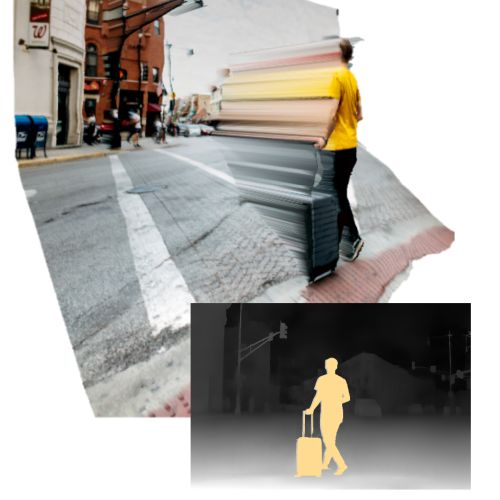

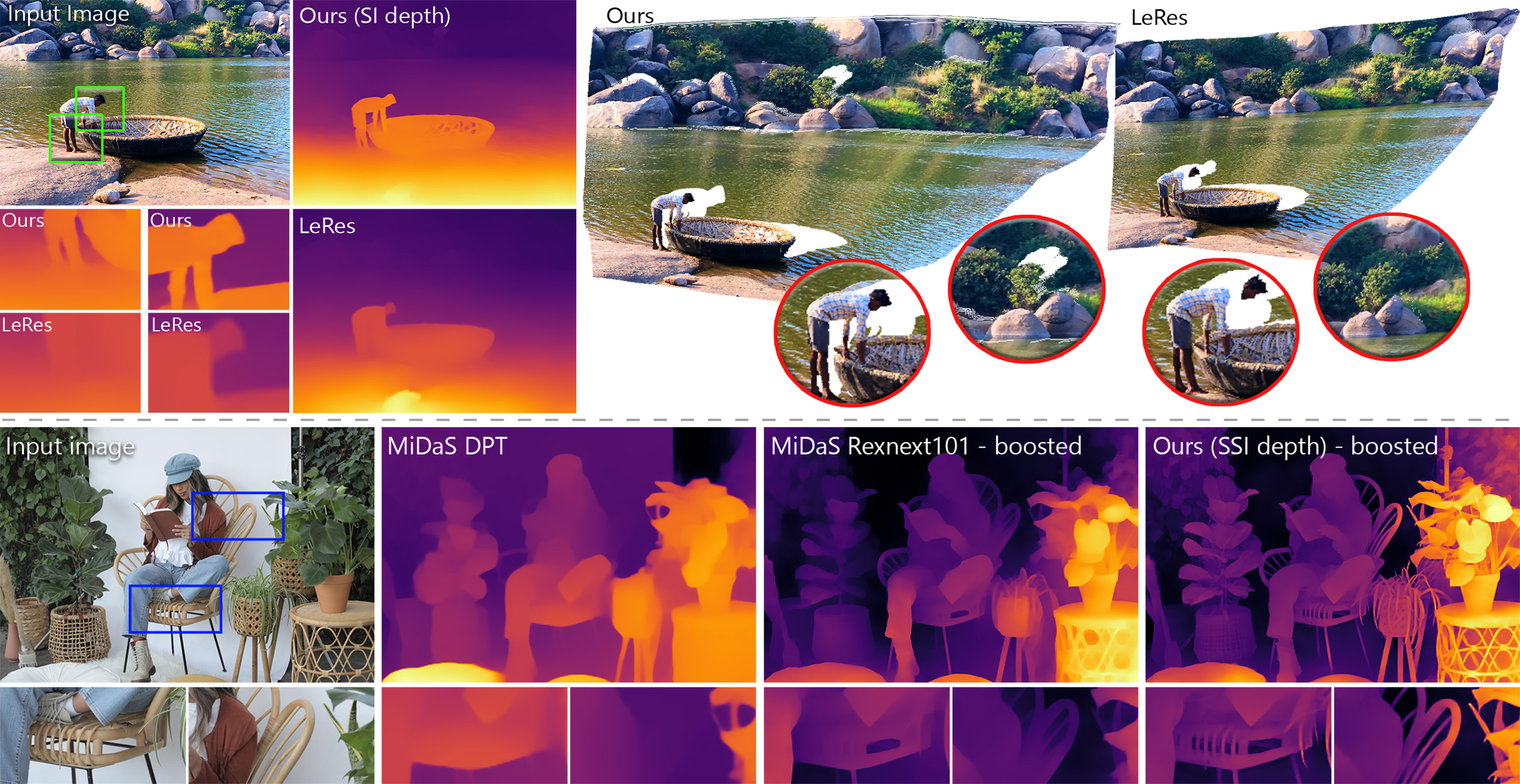

(top) We propose a framework that generates high-resolution scale-invariant (SI) depth from a single image, which can be projected to geometrically accurate point clouds of complex scenes. Our generalization ability comes from formulating SI depth estimation with SSI inputs. (bottom) For this purpose, we introduce a novel scale- and shift-invariant (SSI) depth estimation formulation that excels at generating intricate details.

Abstract

Existing methods for scale-invariant monocular depth estimation (SI MDE) often struggle due to the complexity of the task and the limited, non-diverse datasets available, which hinders generalizability in real-world scenarios. In contrast, shift-and-scale-invariant (SSI) depth estimation simplifies the task and enables training with abundant stereo datasets, and thereby achieves high performance. We present a novel approach that leverages SSI inputs to enhance SI depth estimation, streamlining the network's role and facilitating in-the-wild generalization for SI depth estimation while using only a synthetic dataset for training. Emphasizing the generation of high-resolution details, we introduce a novel sparse ordinal loss that substantially improves detail generation in SSI MDE, addressing critical limitations in existing approaches. Through in-the-wild qualitative examples and zero-shot evaluation, we substantiate the practical utility of our approach in computational photography applications, showcasing its ability to generate highly detailed SI depth maps and to generalize across diverse scenarios.
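To make the distinction between SI and SSI depth concrete, the following is a minimal NumPy sketch, not the implementation from the paper. It assumes the common convention that an SI prediction is correct up to an unknown global scale, while an SSI prediction is correct up to an unknown scale and shift, and that each is compared to a reference after a least-squares alignment. The helper names `align_scale` and `align_scale_shift` are hypothetical, and the toy data makes no assumption about whether values live in depth or inverse-depth space.

```python
import numpy as np

def align_scale(pred, ref):
    """Least-squares scale s minimizing ||s * pred - ref||^2 (hypothetical helper)."""
    p, r = pred.ravel(), ref.ravel()
    s = np.dot(p, r) / np.dot(p, p)
    return s * pred

def align_scale_shift(pred, ref):
    """Least-squares scale s and shift t minimizing ||s * pred + t - ref||^2."""
    p = pred.ravel()
    A = np.stack([p, np.ones_like(p)], axis=1)  # columns: prediction, constant
    (s, t), *_ = np.linalg.lstsq(A, ref.ravel(), rcond=None)
    return s * pred + t

# Toy reference map and two synthetic "predictions" (illustrative values only).
rng = np.random.default_rng(0)
ref = rng.uniform(1.0, 10.0, size=(4, 4))
si_pred = 0.5 * ref          # correct up to an unknown scale
ssi_pred = 0.5 * ref + 2.0   # correct up to an unknown scale and shift

# Maximum absolute error after each alignment.
err_si_scale = np.abs(align_scale(si_pred, ref) - ref).max()
err_ssi_scale = np.abs(align_scale(ssi_pred, ref) - ref).max()
err_ssi_both = np.abs(align_scale_shift(ssi_pred, ref) - ref).max()

print(err_si_scale, err_ssi_scale, err_ssi_both)
```

In this toy setup, scale-only alignment recovers the reference from the SI prediction but not from the SSI prediction, which also needs the shift term. This is why SSI output alone cannot be projected directly to a geometrically consistent point cloud, and why SI depth is the harder target.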

Implementation

Video

SIGGRAPH Presentation

Paper

Poster

BibTeX

author={S. Mahdi H. Miangoleh and Mahesh Reddy and Ya\u{g}{\i}z Aksoy},
title={Scale-Invariant Monocular Depth Estimation via SSI Depth},
booktitle={Proc. SIGGRAPH},
year={2024},
}

Related Publications