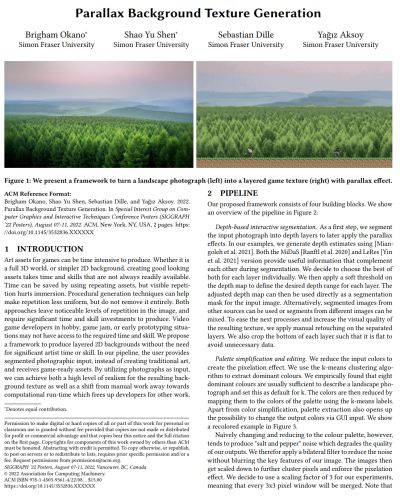

Parallax Background Texture Generation

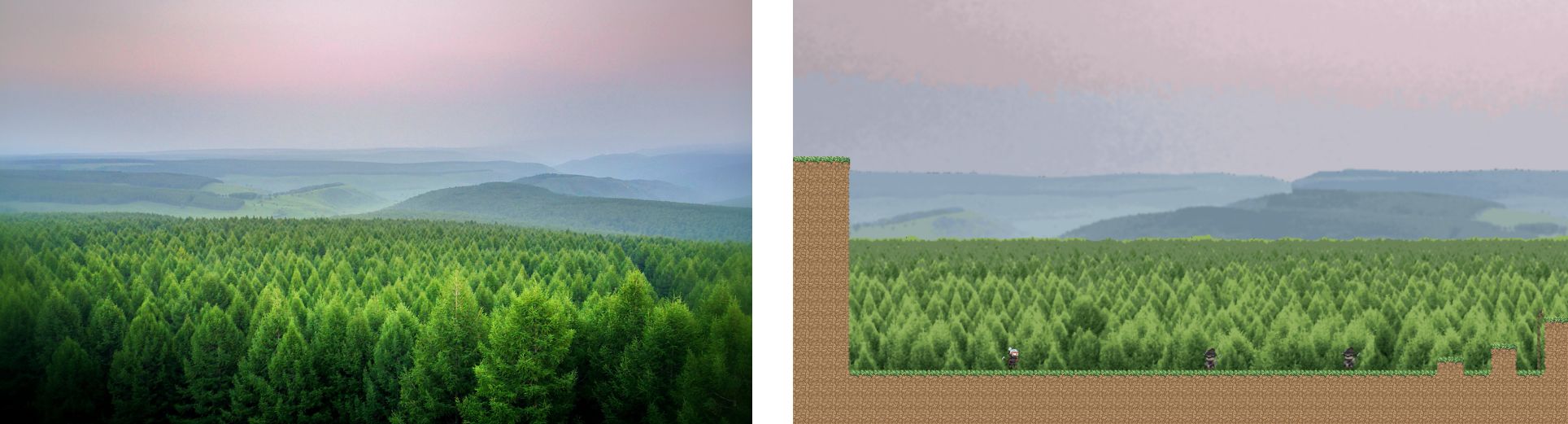

We present a framework that turns a landscape photograph (left) into a layered game texture (right) with a parallax effect.

Abstract

Art assets for games can be time-intensive to produce. Whether it is a full 3D world or a simpler 2D background, creating good-looking assets takes time and skills that are not always readily available. Time can be saved by using repeating assets, but visible repetition hurts immersion. Procedural generation techniques can help make repetition less uniform, but do not remove it entirely. Both approaches leave noticeable repetition in the image and still require a significant investment of time and skill. Video game developers in hobby, game jam, or early prototyping situations may not have access to that time and skill. We propose a framework that produces layered 2D backgrounds without the need for significant artist time or skill. In our pipeline, the user provides segmented photographic input instead of creating traditional art, and receives game-ready assets. By using photographs as input, we achieve a high level of realism in the resulting background texture and shift the effort from manual work to computational run-time, which frees up developers for other work.
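To make the parallax effect mentioned above concrete, here is a minimal sketch of how a game might scroll layered background textures at different speeds. It is an illustration only and not the pipeline described in this work: the function name `parallax_offsets`, the per-layer speed factors, and the texture width are all assumptions chosen for the example.

```python
def parallax_offsets(camera_x, layer_speeds, texture_width):
    """Return the horizontal pixel offset of each layer for a camera position.

    Hypothetical helper: layers with a smaller speed factor move less,
    so they appear farther away. Offsets wrap at the texture width so a
    horizontally tileable layer can scroll indefinitely.
    """
    return [(camera_x * speed) % texture_width for speed in layer_speeds]


# Assumed example values: three layers ordered from far (slow) to near (fast).
speeds = [0.25, 0.5, 1.0]
offsets = parallax_offsets(camera_x=100, layer_speeds=speeds, texture_width=512)
```

In this sketch the farthest layer shifts by a quarter of the camera movement and the nearest layer moves with the camera, which is what produces the impression of depth when the layers are drawn back to front.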

This work was developed by Brigham and Shao Yu as an undergraduate class project for CMPT 461 - Computational Photography at SFU.

Implementation

GitHub Repository for CMPT 461 - Computational Photography

Paper

Video

BibTeX

author={Brigham Okano and Shao Yu Shen and Sebastian Dille and Ya\u{g}{\i}z Aksoy},

title={Parallax Background Texture Generation},

booktitle={SIGGRAPH Posters},

year={2022},

}

More posters from CMPT 461/769: Computational Photography