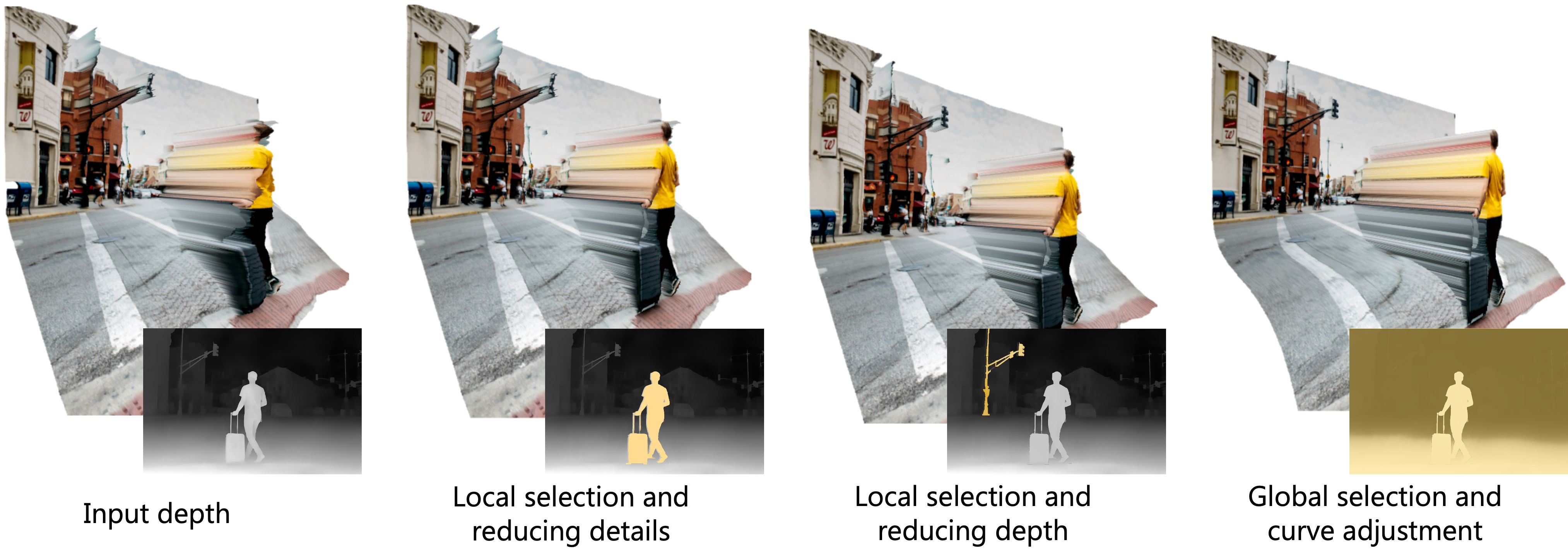

Interactive Editing of Monocular Depth

We propose an interactive web-based depth editing and visualization tool for performing local and global depth editing operations. From left to right, we iteratively edit the input depth map with our tool to refine its 3D geometric properties.

Abstract

Recent advances in computer vision have made 3D structure-aware editing of still photographs a reality. Such computational photography applications use a depth map that is automatically generated by monocular depth estimation methods to represent the scene structure. In this work, we present a lightweight, web-based interactive depth editing and visualization tool that adapts low-level conventional image editing operations for geometric manipulation to enable artistic control in the 3D photography workflow. Our tool provides real-time feedback on the geometry through a 3D scene visualization to make the depth map editing process more intuitive for artists. Our web-based tool is open-source and platform-independent to support wider adoption of 3D photography techniques in everyday digital photography.
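The page does not describe how the depth editing operations are implemented. The following is a minimal Python sketch of what adapting a conventional image edit to geometry can look like. It assumes the depth map is a list of rows of floats normalized to [0, 1]. The functions `global_gamma` (a curves-style global adjustment) and `local_brush` (a soft circular brush that pushes depth up or down) are hypothetical illustrations, not the tool's actual code or API.

```python
import math
from typing import List

# Hypothetical representation: a depth map as rows of floats in [0, 1].
DepthMap = List[List[float]]


def clamp(v: float, lo: float = 0.0, hi: float = 1.0) -> float:
    """Keep edited depth values inside the normalized range."""
    return max(lo, min(hi, v))


def global_gamma(depth: DepthMap, gamma: float) -> DepthMap:
    """Global edit: apply a gamma curve to every depth value,
    analogous to a 'curves' adjustment in an image editor."""
    return [[clamp(d) ** gamma for d in row] for row in depth]


def local_brush(depth: DepthMap, cx: int, cy: int,
                radius: float, strength: float) -> DepthMap:
    """Local edit: add `strength` to depth inside a soft circular brush
    centered at pixel (cx, cy). Returns a new map; the input is unchanged."""
    out = [row[:] for row in depth]
    for y, row in enumerate(out):
        for x, d in enumerate(row):
            dist = math.hypot(x - cx, y - cy)
            if dist < radius:
                # Cosine falloff: weight 1 at the brush center, 0 at the rim.
                w = 0.5 * (1.0 + math.cos(math.pi * dist / radius))
                row[x] = clamp(d + strength * w)
    return out
```

For example, `local_brush(depth, cx=2, cy=2, radius=2.0, strength=0.2)` on a flat 5×5 map of 0.5 raises the center pixel to 0.7 and leaves pixels at or beyond the radius untouched. Returning a new map instead of editing in place makes it straightforward to keep an undo history across iterative edits.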

Interface and Implementation

Interactive depth editing interface
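Giving real-time 3D feedback on an edited depth map requires lifting it into 3D. The page does not state how its visualization does this; one common approach, assumed here, is pinhole-camera unprojection. In this minimal Python sketch, `unproject` and the `focal` parameter (focal length in pixels) are hypothetical. The code also assumes the principal point sits at the image center and that the values are metric-like depth rather than disparity.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def unproject(depth: List[List[float]], focal: float) -> List[Point3]:
    """Lift each pixel (u, v) with depth z to camera space (X, Y, Z)
    under a pinhole model: X = (u - cx) * z / f, Y = (v - cy) * z / f.

    Assumes square pixels and a principal point at the image center.
    """
    h = len(depth)
    w = len(depth[0]) if h else 0
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    points: List[Point3] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            x = (u - cx) * z / focal
            y = (v - cy) * z / focal
            points.append((x, y, z))
    return points
```

The resulting point list could then be rendered as a point cloud, or triangulated over the pixel grid into a mesh, and re-generated after every edit so the viewer tracks the geometry.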

BibTeX

author={Obumneme Stanley Dukor and S. Mahdi H. Miangoleh and Mahesh Kumar Krishna Reddy and Long Mai and Ya\u{g}{\i}z Aksoy},
title={Interactive Editing of Monocular Depth},
booktitle={SIGGRAPH Posters},
year={2022},
}

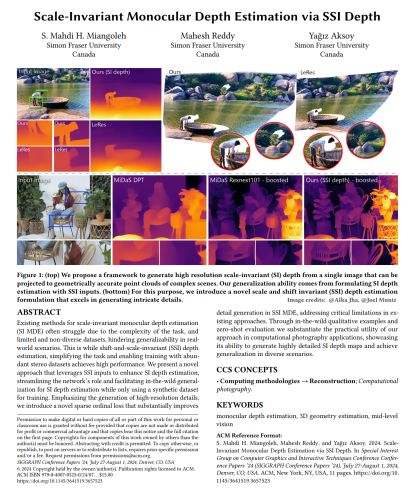

Related Publications