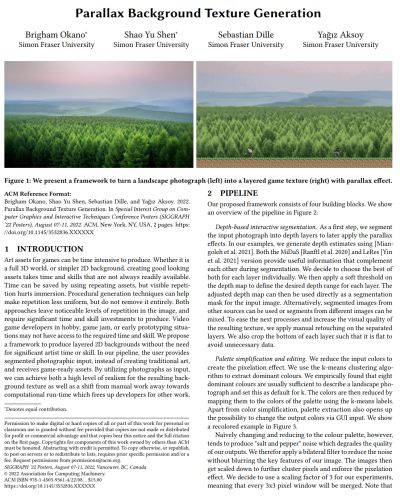

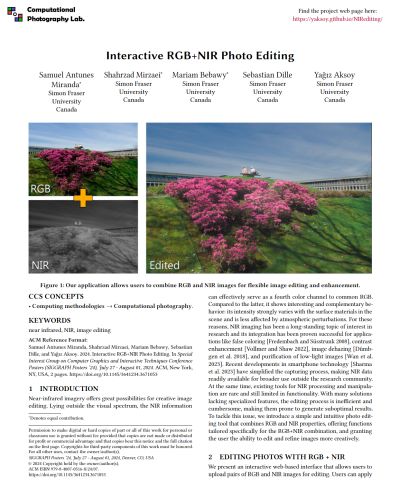

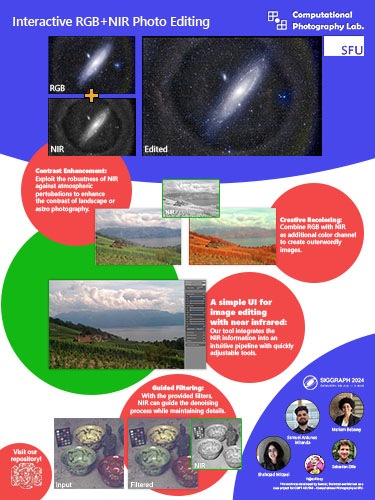

Interactive RGB+NIR Photo Editing


| Samuel Antunes Miranda* | Shahrzad Mirzaei* | Mariam Bebawy* | Sebastian Dille | Yağız Aksoy |

We introduce a simple and intuitive photo editing tool that combines RGB and NIR properties, offering functions tailored specifically for the RGB+NIR combination, and granting the user the ability to edit and refine images more creatively.

Abstract

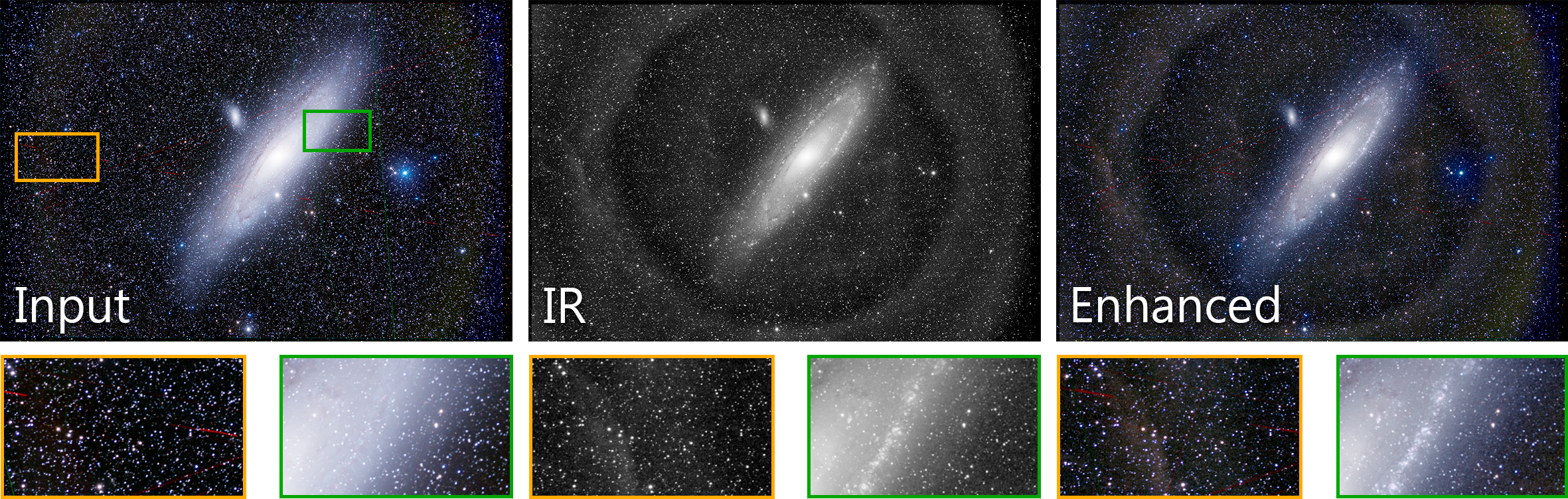

Near-infrared (NIR) imagery offers great possibilities for creative image editing. Lying outside the visible spectrum, NIR information can effectively serve as a fourth color channel alongside the usual RGB. Compared to RGB, it shows interesting and complementary behavior: its intensity varies strongly with the surface materials in the scene and is less affected by atmospheric perturbations. For these reasons, NIR imaging has been a long-standing topic of research interest, and its integration has proven successful in applications such as false coloring, contrast enhancement, image dehazing, and purification of low-light images. Recent developments in smartphone technology have simplified the capturing process, making NIR data readily available for broader use outside the research community. However, existing tools for NIR processing and manipulation are rare and still limited in functionality. Because many solutions lack specialized features, the editing process is inefficient and cumbersome, and prone to producing suboptimal results. To tackle this issue, we introduce a simple and intuitive photo editing tool that combines RGB and NIR properties, offering functions tailored specifically for the RGB+NIR combination, and granting the user the ability to edit and refine images more creatively.
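To make the "fourth color channel" idea concrete, here is a minimal sketch of false coloring, one of the applications named above. It is not taken from the tool itself. It assumes the common color-infrared convention (NIR shown as red, red as green, green as blue) and registered RGB and NIR images of equal size, stored as nested lists of pixel values. The function name `false_color` is hypothetical.

```python
def false_color(rgb_rows, nir_rows):
    """Map NIR -> red, red -> green, green -> blue (blue is dropped).

    rgb_rows: list of rows, each row a list of (r, g, b) tuples.
    nir_rows: list of rows, each row a list of NIR intensities.
    Both inputs are assumed to be pixel-aligned and the same size.
    """
    if len(rgb_rows) != len(nir_rows):
        raise ValueError("RGB and NIR images must have the same height")
    result = []
    for rgb_row, nir_row in zip(rgb_rows, nir_rows):
        if len(rgb_row) != len(nir_row):
            raise ValueError("RGB and NIR images must have the same width")
        # Shift each channel down one slot and put NIR in the red slot.
        result.append([(n, r, g) for (r, g, _b), n in zip(rgb_row, nir_row)])
    return result


# Tiny 1x2 example: a vegetation-like pixel (high NIR) and a dark pixel.
rgb = [[(40, 90, 30), (10, 10, 10)]]
nir = [[220, 15]]
composite = false_color(rgb, nir)
```

Vegetation usually reflects NIR strongly, so it appears bright red in this kind of composite. A real implementation would more likely operate on whole arrays with an image-processing library than loop over pixels.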

This work was developed by Samuel, Shahrzad, and Mariam as a class project for CMPT 461/769 - Computational Photography at SFU.

Implementation

GitHub Repository for CMPT 461/769 - Computational Photography

Paper


Video

BibTeX

author={Samuel Antunes Miranda and Shahrzad Mirzaei and Mariam Bebawy and Sebastian Dille and Ya\u{g}{\i}z Aksoy},

title={Interactive RGB+NIR Photo Editing},

booktitle={SIGGRAPH Posters},

year={2024},

}

More posters from CMPT 461/769: Computational Photography