Intrinsic Image Decomposition via Ordinal Shading

Patent pending

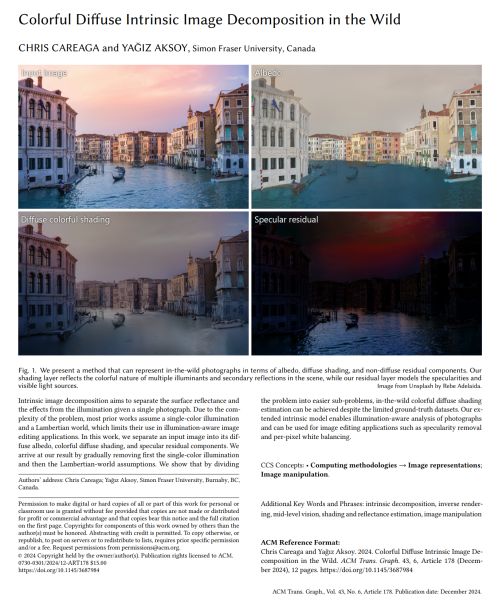

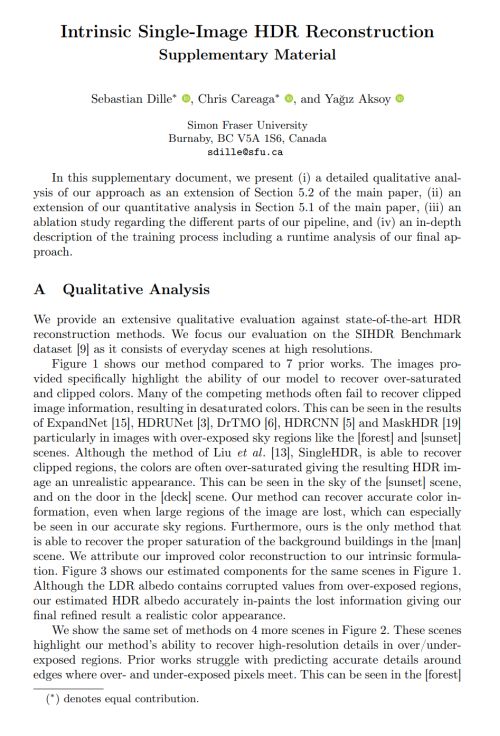

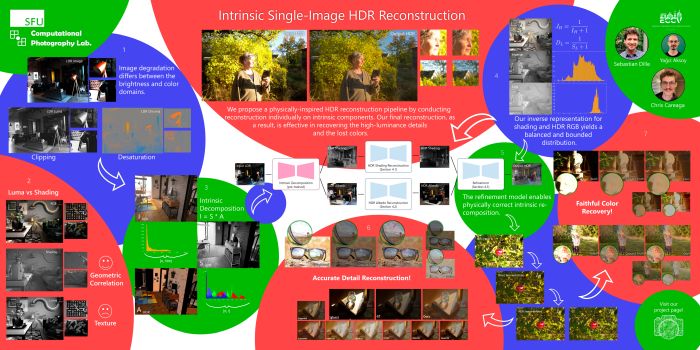

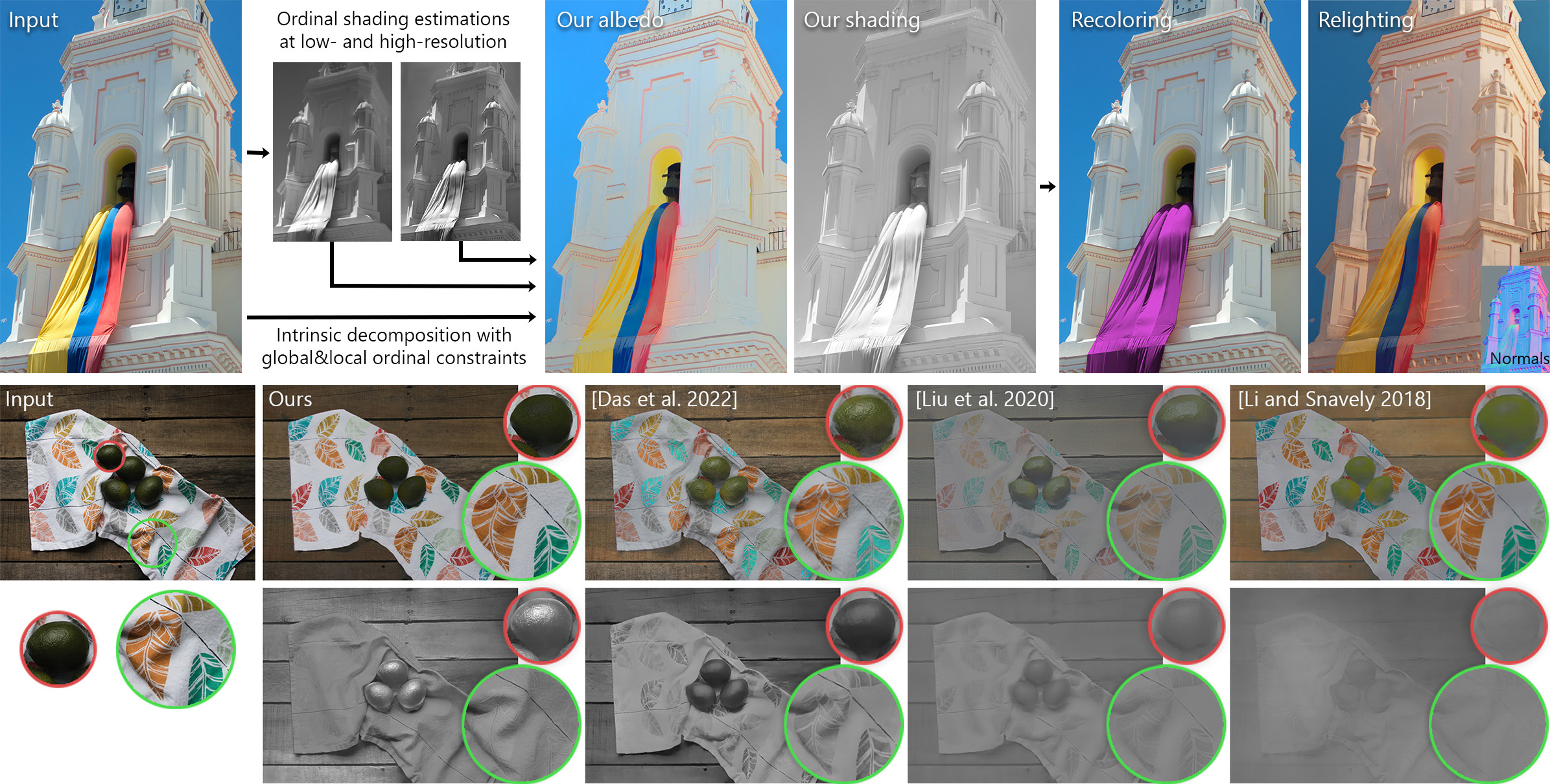

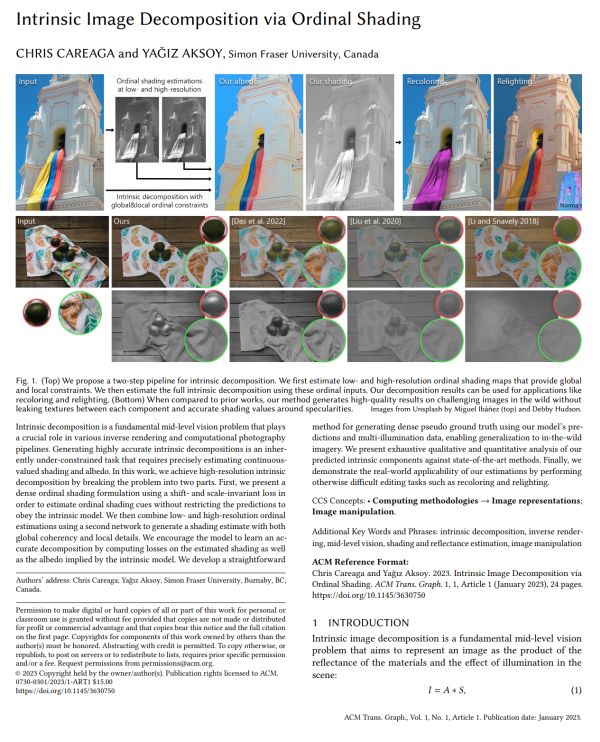

(Top) We propose a two-step pipeline for intrinsic decomposition. We first estimate low- and high-resolution ordinal shading maps that provide global and local constraints. We then estimate the full intrinsic decomposition using these ordinal inputs. Our decomposition results can be used for applications like recoloring and relighting. (Bottom) Compared to prior work, our method generates high-quality results on challenging in-the-wild images, without leaking textures between the components and with accurate shading values around specularities.

Abstract

Intrinsic decomposition is a fundamental mid-level vision problem that plays a crucial role in various inverse rendering and computational photography pipelines. Generating highly accurate intrinsic decompositions is an inherently under-constrained task that requires precisely estimating continuous-valued shading and albedo. In this work, we achieve high-resolution intrinsic decomposition by breaking the problem into two parts. First, we present a dense ordinal shading formulation using a shift- and scale-invariant loss in order to estimate ordinal shading cues without restricting the predictions to obey the intrinsic model. We then combine low- and high-resolution ordinal estimations using a second network to generate a shading estimate with both global coherency and local details. We encourage the model to learn an accurate decomposition by computing losses on the estimated shading as well as the albedo implied by the intrinsic model. We develop a straightforward method for generating dense pseudo ground truth using our model’s predictions and multi-illumination data, enabling generalization to in-the-wild imagery. We present exhaustive qualitative and quantitative analysis of our predicted intrinsic components against state-of-the-art methods. Finally, we demonstrate the real-world applicability of our estimations by performing otherwise difficult editing tasks such as recoloring and relighting.
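To make two ideas from the abstract concrete, the sketch below shows one common form of a shift- and scale-invariant loss and the albedo implied by the intrinsic model. It is an illustration under stated assumptions, not the paper's implementation: the least-squares alignment, the single-channel model image = albedo × shading, and the names `fit_scale_shift`, `ssi_mse`, and `implied_albedo` are all hypothetical choices made here, and images are flattened to plain lists of floats.

```python
from typing import List, Tuple


def fit_scale_shift(pred: List[float], target: List[float]) -> Tuple[float, float]:
    """Closed-form least-squares scale s and shift t minimizing sum((s*pred + t - target)^2)."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    # A constant prediction carries no ordering information: fall back to scale 0.
    scale = cov / var_p if var_p > 0 else 0.0
    shift = mean_t - scale * mean_p
    return scale, shift


def ssi_mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error after optimally aligning pred to target (assumed loss form)."""
    scale, shift = fit_scale_shift(pred, target)
    return sum((scale * p + shift - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def implied_albedo(image: List[float], shading: List[float], eps: float = 1e-6) -> List[float]:
    """Albedo implied by the assumed model image = albedo * shading."""
    return [i / max(s, eps) for i, s in zip(image, shading)]
```

Because the loss is computed after alignment, a prediction that is correct only up to an affine transform of the target incurs zero penalty, which is why such a loss does not force the ordinal estimate to satisfy the intrinsic model directly.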

Implementation

Video

Paper


Dataset


We present the first dense, real-world, and large-scale dataset for intrinsic image decomposition. Our dataset is derived from the Multi-Illumination Dataset by Murmann et al. and consists of 1000 scenes, each captured under 25 different illuminations, yielding 1000 unique albedo maps and 25,000 pairs of images and RGB shading maps.
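The counts above (one albedo map per scene, one shading map per image) suggest how such pairs can be organized. The sketch below is one plausible way to build them and is not confirmed by this page: it assumes per-illumination albedo estimates are combined with a per-pixel median, and that each shading map is then recovered as image divided by albedo. The function name `pseudo_ground_truth`, the median aggregation, and the single-channel flattened-list representation are assumptions made for illustration.

```python
from statistics import median
from typing import List, Tuple


def pseudo_ground_truth(
    images: List[List[float]],
    est_shadings: List[List[float]],
    eps: float = 1e-6,
) -> Tuple[List[float], List[List[float]]]:
    """Build one shared albedo and per-image shading for a single scene.

    images:       K images of the same scene under K illuminations (flattened pixels).
    est_shadings: K shading estimates, e.g. produced by a decomposition model.
    """
    num_pixels = len(images[0])
    albedo = []
    for px in range(num_pixels):
        # Each illumination gives its own albedo estimate for this pixel.
        estimates = [img[px] / max(sh[px], eps) for img, sh in zip(images, est_shadings)]
        # Assumed aggregation: the median is robust to a few bad estimates.
        albedo.append(median(estimates))
    # Shading consistent with the shared albedo under image = albedo * shading.
    shadings = [[img[px] / max(albedo[px], eps) for px in range(num_pixels)] for img in images]
    return albedo, shadings
```

With 25 illuminations per scene, a call like this would return one albedo map and 25 shading maps, matching the 1000 and 25,000 totals over 1000 scenes.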

This dataset accompanies our ACM Trans. Graph. 2023 paper.

@ARTICLE{careagaIntrinsic,
  author={Chris Careaga and Ya\u{g}{\i}z Aksoy},
  title={Intrinsic Image Decomposition via Ordinal Shading},
  journal={ACM Trans. Graph.},
  year={2023},
}

Poster


BibTeX

@ARTICLE{careagaIntrinsic,
  author={Chris Careaga and Ya\u{g}{\i}z Aksoy},
  title={Intrinsic Image Decomposition via Ordinal Shading},
  journal={ACM Trans. Graph.},
  year={2023},
  volume = {43},
  number = {1},
  articleno = {12},
  numpages = {24},
}

Media Release


License

The methodology presented in this work is safeguarded under intellectual property protection. For inquiries regarding licensing opportunities, kindly reach out to SFU Technology Licensing Office <tlo_dir ατ sfu δøτ ca> and Dr. Yağız Aksoy <yagiz ατ sfu δøτ ca>.

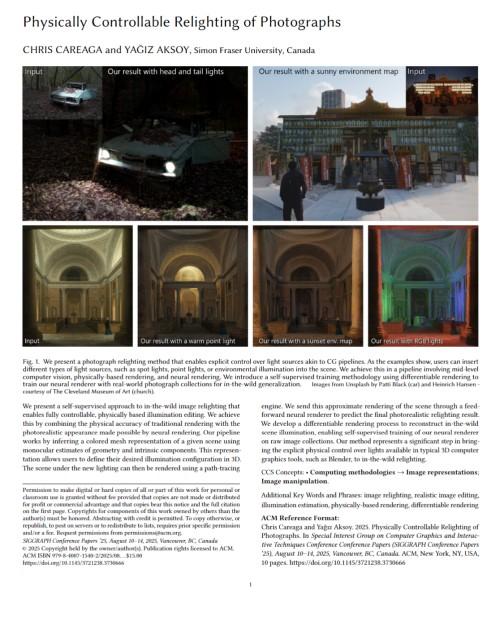

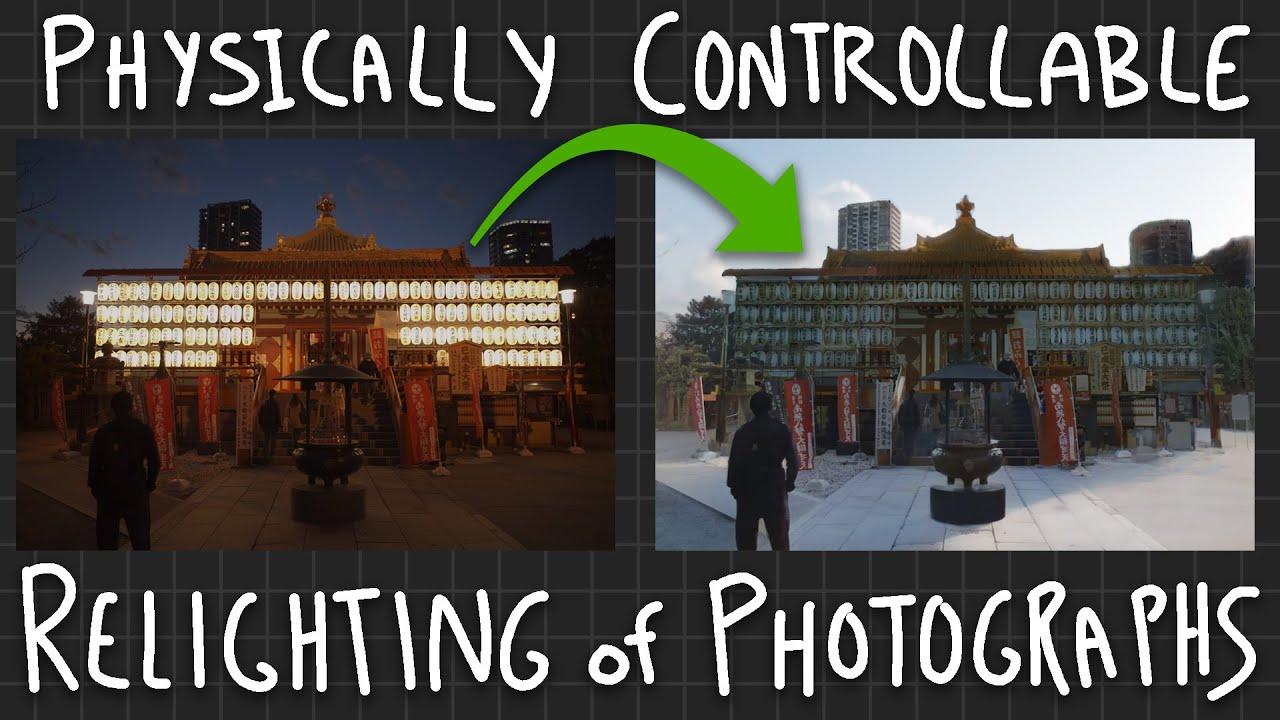

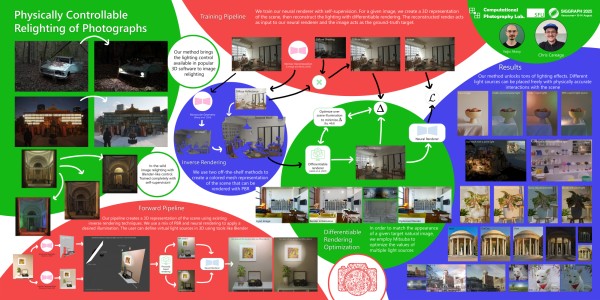

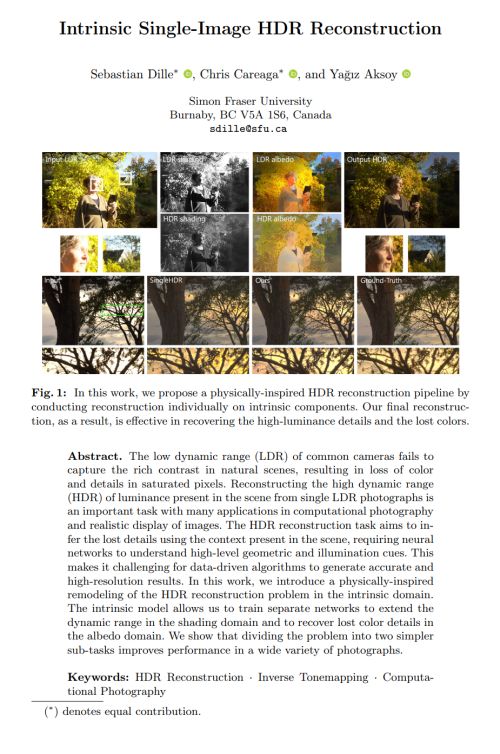

Related Publications