Publications

See our Research page for a description of our research themes and our Media page for a less technical description of our work.

Major venues

Minor venues

Published CMPT 461 / 769 projects

Physically-Based Compositing of 2D Graphics

SIGGRAPH Posters, 2025 - coming soon

We propose an interactive pipeline that enables the seamless integration of a 2D logo into a target image, adapting to the surface geometry and lighting conditions of the scene to ensure realistic appearance.

@INPROCEEDINGS{traceyDiaconuCompositing,
  author={Tyrus Tracey and Stefan Diaconu and Sebastian Dille and S. Mahdi H. Miangoleh and Ya\u{g}{\i}z Aksoy},
  title={Physically-Based Compositing of {2D} Graphics},
  booktitle={SIGGRAPH Posters},
  year={2025},
}

Interactive Object Insertion with Differentiable Rendering

SIGGRAPH Posters, 2025 - coming soon

We develop an object insertion pipeline and interface that enables iterative editing of illumination-aware composite images. Our pipeline leverages off-the-shelf computer vision methods and differentiable rendering to reconstruct a 3D representation of a given scene. Users can add 3D objects and render them with physically accurate lighting effects.

@INPROCEEDINGS{pengTairaCompositing,
  author={Weikun Peng and Sota Taira and Chris Careaga and Ya\u{g}{\i}z Aksoy},
  title={Interactive Object Insertion with Differentiable Rendering},
  booktitle={SIGGRAPH Posters},
  year={2025},
}

Theses

PhD Thesis, ETH Zurich, 2019

Other works by Yağız

AR Museum: A Mobile Augmented Reality Application for Interactive Painting Recoloring

International Conference on Game and Entertainment Technologies, 2017

We present a mobile augmented reality application that allows its users to modify colors of paintings via simple touch interactions. Our method is intended for museums and art exhibitions and aims to provide an entertaining way for interacting with paintings in a non-intrusive manner. Plausible color edits are achieved by utilizing a set of layers with corresponding alpha channels, which needs to be generated for each individual painting in a pre-processing step. Manually performing such a layer decomposition is a tedious process and makes the entire system infeasible for most practical use cases. In this work, we propose the use of a fully automatic soft color segmentation algorithm for content generation for such an augmented reality application. This way, we significantly reduce the amount of manual labor needed for deploying our system and thus make our system feasible for real-world use.

@INPROCEEDINGS{armuseum,
  author={Mattia Ryffel and Fabio Z\"und and Ya\u{g}{\i}z Aksoy and Alessia Marra and Maurizio Nitti and Tun\c{c} Ozan Ayd{\i}n and Bob Sumner},
  title={AR Museum: A Mobile Augmented Reality Application for Interactive Painting Recoloring},
  booktitle={International Conference on Game and Entertainment Technologies},
  year={2017},
}

Impact of transrectal prostate needle biopsy on erectile function: Results of power Doppler ultrasonography of the prostate

The Kaohsiung Journal of Medical Sciences, 2014

We evaluated the impact of transrectal prostate needle biopsy (TPNB) on erectile function and on the prostate and bilateral neurovascular bundles using power Doppler ultrasonography imaging of the prostate. The study consisted of 42 patients who had undergone TPNB. Erectile function was evaluated prior to the biopsy, and in the 3rd month after the biopsy using the first five-item version of the International Index of Erectile Function (IIEF-5). Prior to and 3 months after the biopsy, the resistivity index of the prostate parenchyma and both neurovascular bundles was measured. The mean age of the men was 64.2 (47–78) years. Prior to TPNB, 10 (23.8%) patients did not have erectile dysfunction (ED) and 32 (76.2%) patients had ED. The mean IIEF-5 score was 20.8 (range: 2–25) prior to the biopsies, and the mean IIEF-5 score was 17.4 (range: 5–25; p < 0.001) after 3 months. For patients who were previously potent in the pre-biopsy period, the ED rate was 40% (n = 4/10) at the 3rd month evaluation. In these patients, all the resistivity index values were significantly decreased. Our results showed that TPNB may lead to an increased risk of ED. The presence of ED in men after TPNB might have an organic basis.

@ARTICLE{Tuncel2014,
  title = "Impact of transrectal prostate needle biopsy on erectile function: Results of power Doppler ultrasonography of the prostate",
  author = "Altug Tuncel and Ugur Toprak and Melih Balci and Ersin Koseoglu and Yagiz Aksoy and Alp Karademir and Ali Atan",
  journal = "The Kaohsiung Journal of Medical Sciences",
  volume = "30",
  number = "4",
  pages = "194--199",
  year = "2014",
}

Patents

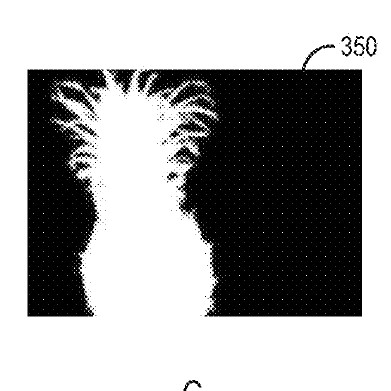

Systems and Methods for Image Decomposition

Systems and Methods for Image Relighting and Image Compositing

US Patent US11615555B2, granted 2023

US Patent US10650524B2, granted 2020

US Patent US10037615B2, granted 2018
